Bridging the ‘Space Between’ in Generative Video

New research from China has introduced an improved method of interpolating the gap between two temporally-distanced video frames, a crucial challenge in the ongoing pursuit of realism in generative AI video and video codec compression.

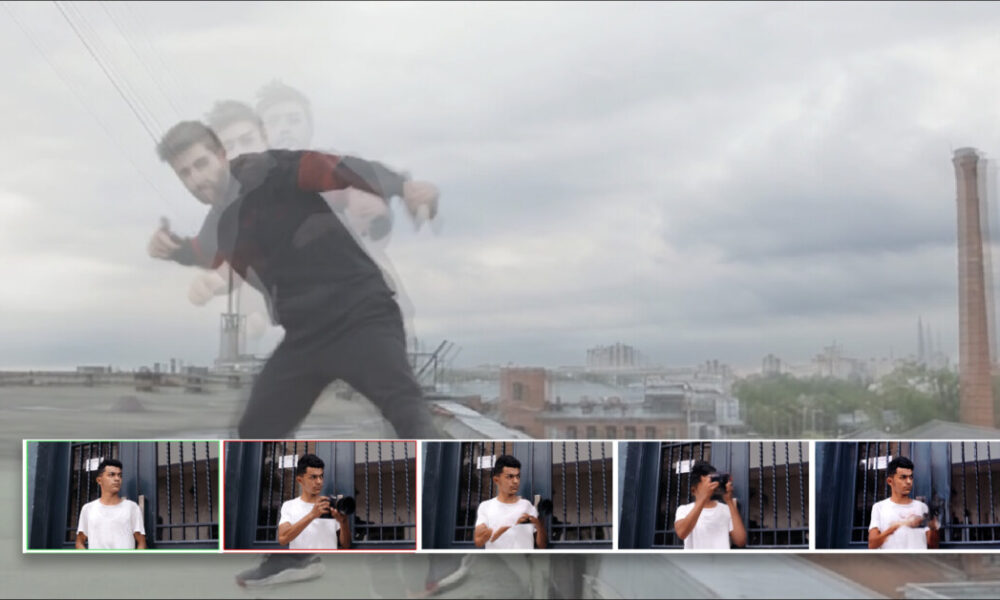

In the world of generative video, one of the key tasks is to bridge the gap between two still frames, predicting how the subject in the images would transition from one frame to the next. This process, known as tweening, is essential for creating smooth and logical transitions in animations.

The latest method developed by Chinese researchers, called Frame-wise Conditions-driven Video Generation (FCVG), has shown promising results in providing seamless transitions between frames. In comparison, other frameworks such as Google’s Frame Interpolation for Large Motion (FILM), Time Reversal Fusion (TRF), and Generative Inbetweening (GI) have struggled to accurately interpret and predict motion between frames.

FCVG’s approach offers a more credible and realistic solution, although there are still some artifacts such as unwanted morphing of hands and facial features. Despite its imperfections, FCVG represents a significant advancement in the field of generative video.

The ability to interpolate between frames is crucial for maintaining temporal consistency in generative video. Without accurate interpolation, generative systems may introduce inconsistencies and distortions in the video content. Commercial systems like RunwayML have demonstrated effective inbetweening capabilities, but closed-source solutions limit the ability to advance the open-source state of the art in generative video.

Overall, bridging the ‘space between’ in generative video is a challenging task that requires innovative approaches and continuous improvement. The development of methods like FCVG represents a step forward in the quest for realistic and seamless generative video content.

Bridging the ‘Space Between’ in Generative Video: A Breakthrough in Video Generation Technology

In the ever-evolving landscape of computer vision research, the quest for superior rendering and video generation capabilities has been a continuous endeavor. Recent advancements in this field have brought forth new methods and techniques that aim to bridge the gap between existing technologies and the desired outcomes in generative video production.

One such development is Frame-wise Conditions-driven Video Generation (FCVG), introduced in the paper Generative Inbetweening through Frame-wise Conditions-Driven Video Generation. Developed by a team of researchers from the Harbin Institute of Technology and Tianjin University in China, the approach addresses inherent challenges and limitations in video interpolation tasks.

The core concept behind FCVG lies in the utilization of frame-wise conditions to guide the video generation process. By breaking down the creation of interstitial frames into smaller sub-tasks and providing explicit conditions for each frame, FCVG aims to ensure more stable and consistent output. This approach helps to reduce ambiguity in video generation and leads to smoother transitions between individual frames.

Comparing FCVG to traditional time-reversal methods, the authors highlight the advantages of their approach in terms of reducing ambiguity and improving the overall quality of generated videos. By combining information from both forward and backward directions and utilizing pre-trained models such as GlueStick for line-matching, FCVG offers a more refined and iterative process for inbetweening frames.
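To make the idea of per-frame conditions concrete, the following is a minimal sketch, not the authors' implementation. It assumes that line segments have already been matched between the first and last frame (for example by a line matcher such as GlueStick) and that the condition for each in-between frame is obtained by linearly interpolating the matched endpoints. The names `Line`, `lerp_point`, and `interpolate_conditions` are hypothetical, and the linear interpolation itself is an assumption made for illustration.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Line = Tuple[Point, Point]  # a line segment stored as (start, end)


def lerp_point(a: Point, b: Point, t: float) -> Point:
    """Linearly interpolate between two 2D points, with t in [0, 1]."""
    return (a[0] + (b[0] - a[0]) * t, a[1] + (b[1] - a[1]) * t)


def interpolate_conditions(
    first: List[Line], last: List[Line], num_frames: int
) -> List[List[Line]]:
    """Build one set of line conditions per frame (hypothetical helper).

    first[i] and last[i] are assumed to be the same physical line,
    matched across the two input frames.
    """
    if len(first) != len(last):
        raise ValueError("matched line lists must have equal length")
    if num_frames < 2:
        raise ValueError("need at least the two end frames")

    frames: List[List[Line]] = []
    for i in range(num_frames):
        t = i / (num_frames - 1)  # 0.0 at the first frame, 1.0 at the last
        frames.append(
            [
                (lerp_point(s0, s1, t), lerp_point(e0, e1, t))
                for (s0, e0), (s1, e1) in zip(first, last)
            ]
        )
    return frames
```

Each entry of the returned list is an explicit condition for one frame, which is what removes the ambiguity a generator would otherwise face when it only sees the two end frames.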

Furthermore, FCVG addresses the challenges posed by unlicensed data usage in commercial models, emphasizing the importance of legal compliance and ethical data practices in AI training. As the regulatory landscape for AI continues to evolve, it is crucial for researchers and practitioners in the computer vision sector to adhere to best practices and ensure the long-term sustainability of their solutions.

In conclusion, the introduction of FCVG represents a significant advancement in generative video technology, offering a new perspective on video interpolation and frame generation. By leveraging frame-wise conditions and innovative methodologies, FCVG paves the way for future developments in the field of computer vision and sets a new standard for high-quality video production.

Bridging the Gap in Generative Video with FCVG

Generative video is one of the fastest-developing technologies in computer vision. A central concern in this area is the ability to produce consistent, realistic animation, and one method that plays an important role in achieving this is FCVG, or Frame-wise Conditions-driven Video Generation.

In FCVG, correspondence between matching colors is important for keeping content consistent as the animation develops. The process involves injecting detailed frame-wise conditions into the SVD (Stable Video Diffusion) model, one of the current technologies in generative video. The method developed for the ControlNeXt initiative is used here: the control conditions are first encoded by several ResNet blocks, after which cross normalization is applied between the condition branch and the SVD branch of the workflow.
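The article does not spell out the cross normalization formula. The sketch below assumes the commonly described form, in which the control features are normalized with the mean and variance of the main-branch features so that both branches share a similar scale. The function name `cross_normalize` and the parameters `gamma` and `eps` are hypothetical, and features are flattened to plain lists of floats to keep the example dependency-free.

```python
import math
from statistics import fmean, pvariance
from typing import List


def cross_normalize(
    control: List[float],
    main: List[float],
    gamma: float = 1.0,
    eps: float = 1e-5,
) -> List[float]:
    """Normalize control features with statistics of the main branch.

    Assumption: mean and variance come from the main (SVD) branch,
    not from the control branch itself; gamma is a scale factor.
    """
    mu = fmean(main)           # mean of the main-branch features
    var = pvariance(main, mu)  # population variance of the main branch
    scale = gamma / math.sqrt(var + eps)
    return [(c - mu) * scale for c in control]
```

With `main = [1.0, 3.0]` the statistics are a mean of 2 and a variance of 1, so control values are shifted by 2 and left almost unscaled. The point of borrowing statistics from the main branch is that the newly added control branch does not inject features at a wildly different magnitude into a largely frozen model.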

A small set of videos is used to fine-tune the SVD model, with most of the model's parameters frozen. This produces favorable results in most scenarios, and simple average fusion, without noise re-injection, proves sufficient in FCVG.
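As a rough illustration of simple average fusion, the sketch below combines a forward path (generated from the first frame) with a backward path (generated from the last frame). It assumes the backward path is produced in reverse temporal order and must be flipped before the per-frame average is taken; that alignment step, the function name `fuse_forward_backward`, and the representation of each frame as a flat list of floats are assumptions for illustration, not details confirmed by the article.

```python
from typing import List

Frame = List[float]  # a flattened frame or latent, for illustration only


def fuse_forward_backward(forward: List[Frame], backward: List[Frame]) -> List[Frame]:
    """Average two generation paths frame by frame (hypothetical helper).

    forward  is ordered first frame -> last frame.
    backward is ordered last frame -> first frame, so it is reversed
    before fusing. No noise is re-injected after the average.
    """
    if len(forward) != len(backward):
        raise ValueError("both paths must contain the same number of frames")

    aligned_backward = backward[::-1]  # now first frame -> last frame
    fused: List[Frame] = []
    for f, b in zip(forward, aligned_backward):
        if len(f) != len(b):
            raise ValueError("frames must have the same size")
        fused.append([(x + y) / 2.0 for x, y in zip(f, b)])
    return fused
```

A plain average only works when the two paths already agree closely, which is the situation that explicit per-frame conditions are meant to create.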

To test the system, the researchers curated a dataset covering a variety of scenes, including outdoor environments, human poses, and interior locations, with motions such as camera movement, dance moves, and facial expressions, among others. The collection was split 4:1 between fine-tuning and testing. Several metrics were used for evaluation: Learned Perceptual Image Patch Similarity (LPIPS), Fréchet Inception Distance (FID), Fréchet Video Distance (FVD), VBench, and Fréchet Video Motion Distance (FVMD).
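A 4:1 split means four fifths of the clips go to fine-tuning and one fifth to testing. The snippet below is a minimal sketch of such a split; the shuffling, the fixed seed, and the name `split_clips` are assumptions, since the article does not describe how the researchers partitioned their data.

```python
import random
from typing import List, Sequence, Tuple


def split_clips(clips: Sequence[str], seed: int = 0) -> Tuple[List[str], List[str]]:
    """Split clip identifiers 4:1 into (fine-tuning, testing) lists."""
    shuffled = list(clips)
    random.Random(seed).shuffle(shuffled)  # seeded, so the split is repeatable
    cut = len(shuffled) * 4 // 5           # four fifths go to fine-tuning
    return shuffled[:cut], shuffled[cut:]
```

Keeping the test clips out of fine-tuning is what makes the reported metrics a measure of generalization rather than memorization.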

In quantitative tests, FCVG outperformed prior approaches, with notable improvements across all metrics. Qualitative tests likewise showed that FCVG delivers satisfactory results across a range of scenarios, including complex cases such as human and object motion.

Overall, FCVG represents at least an incremental improvement in frame interpolation technology in a non-proprietary context. The researchers have made the code for the project available on GitHub, although the associated dataset had not been released at the time of writing.

With advances in generative video such as FCVG, further significant progress in the field can be expected. Although some limitations remain, the potential and benefits of the technology are considerable, and it could contribute meaningfully to a range of future applications. We hope this article gives a clear picture of why generative video matters and how FCVG can be one step forward in bridging the gap in generative video.

Tags: Generative Video, FCVG, Frame Interpolation

Source: Unite AI

Author: Unite AI team

Generative video is a form of video art that is created using algorithms and computer programs to generate moving images. This form of art blurs the line between the digital and physical worlds, creating mesmerizing visuals that are constantly evolving and changing. One of the challenges in creating generative video is bridging the ‘space between’: the gap between the artist’s vision and the final output.

Generative video artists often start with a concept or idea that they want to explore, whether it be a particular theme, emotion, or aesthetic. They then use programming languages such as Processing or Max/MSP to create algorithms that will generate the visuals based on their input. This process involves a lot of trial and error, as the artist must fine-tune the algorithms to achieve the desired result.

The ‘space between’ in generative video refers to the gap between the artist’s intention and the final output. This gap can be bridged through careful experimentation, iteration, and refinement of the algorithms. Artists must constantly iterate on their code, making adjustments and tweaks to fine-tune the visuals and bring them closer to their original vision.

One of the key challenges in bridging this gap is finding a balance between control and randomness. Generative video relies on algorithms to generate visuals, which can sometimes result in unexpected or unpredictable outcomes. Artists must strike a balance between guiding the algorithms to achieve their vision while also allowing for serendipitous discoveries and surprises.

Another challenge in generative video is maintaining a sense of coherence and narrative. Unlike traditional video production, generative video does not follow a linear timeline or storyboard. Instead, the visuals are constantly evolving and changing in response to the algorithms. Artists must find ways to create a sense of cohesion and flow in their work, guiding the viewer through the ever-changing imagery.

Despite these challenges, bridging the ‘space between’ in generative video can lead to incredibly unique and captivating visuals. By experimenting with algorithms, exploring new techniques, and pushing the boundaries of what is possible, artists can create immersive and dynamic video art that challenges traditional notions of storytelling and visual communication. Through careful iteration and refinement, artists can bridge the gap between their vision and the final output, creating a truly innovative and mesmerizing experience for viewers.